Does Google Index Your robots.txt?

This is a contribution by SEO strategist Moosa Hemani. It has been slightly edited by me, Tad Chef, the owner of this blog.

robots.txt is a protocol that tells search engines which parts of a website should not be included in their index. According to Wikipedia:

“The Robot Exclusion Standard, also known as the Robots Exclusion Protocol or robots.txt protocol, is a convention to prevent cooperating web crawlers and other web robots from accessing all or part of a website which is otherwise publicly viewable.”

As an SEO, you must have tried this search operator in Google: [site:example.com]. This simply returns the pages from example.com that have been crawled and included in the Google index.
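
If you run this check often, the search URL can be built programmatically. The following is only a minimal sketch: the helper name `site_query_url` is made up for illustration, and it assumes the usual `https://www.google.com/search?q=...` URL format.

```python
from urllib.parse import urlencode


def site_query_url(target: str) -> str:
    """Build a Google search URL for the query [site:<target>].

    `site_query_url` is a hypothetical helper name, not part of any library.
    """
    # urlencode percent-encodes ':' and '/' so the operator survives in the URL.
    return "https://www.google.com/search?" + urlencode({"q": f"site:{target}"})


print(site_query_url("example.com"))
# -> https://www.google.com/search?q=site%3Aexample.com
print(site_query_url("example.com/robots.txt"))
# -> https://www.google.com/search?q=site%3Aexample.com%2Frobots.txt
```

The second call narrows the query to a single file, which is the quickest way to see whether a robots.txt file has been indexed.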

Googlebot does not crawl any pages that are ‘disallowed’ by the robots.txt file. Everything makes sense so far, but

what if your robots.txt file itself started to appear in Google search results?

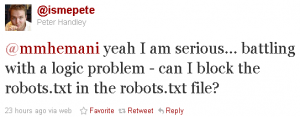

To be honest, I thought somebody was poking fun at me. It didn’t sound logical at all. After reading a tweet by Peter Handley, aka @ismepete, I took it seriously though.

He is one of the brightest minds in the search industry! Shocked, amazed, or I guess a mix of both, I quickly jumped over to Google to see it for myself. Guess what I found?

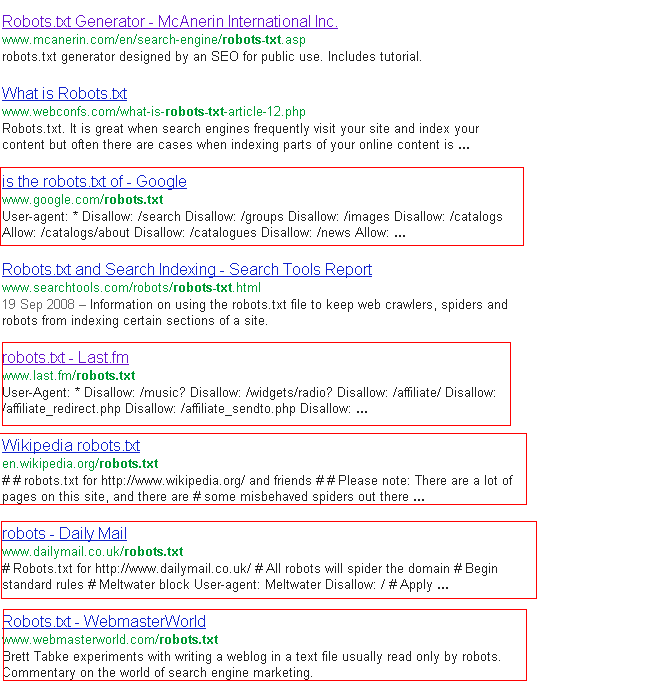

You see, Peter is not the only one dealing with this. Websites like

- Dailymail

- Webmasterworld

- Last.fm

and many others… all have their robots.txt file indexed in Google.

You see, it’s simply illogical to block ‘robots.txt’ in a robots.txt file. This didn’t make any sense to me: Why does Google actually index this file, and how do you de-index it from the search engine?
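
To make the ‘disallow’ mechanism concrete, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The rules and the `ExampleBot` user agent are invented for illustration. The sketch only shows how a cooperating crawler decides whether it may fetch a URL; it says nothing about how Google decides what to index.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; a real site would have its own.
RULES = """\
User-agent: *
Disallow: /private/
""".splitlines()

parser = RobotFileParser()
parser.parse(RULES)  # parse the rules directly, no network request needed


def allowed(path: str) -> bool:
    """Return True if a crawler called 'ExampleBot' may fetch this path."""
    return parser.can_fetch("ExampleBot", "https://example.com" + path)


print(allowed("/private/report.html"))  # False: matches the Disallow rule
print(allowed("/robots.txt"))           # True: no rule covers this path
```

Under these assumed rules the robots.txt file itself stays fetchable, which it has to be: a crawler must read the file before it can obey it.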

Why does Google index the robots.txt?

There can be multiple reasons why Google indexes the robots.txt file, but I have identified the two most common reasons why search engines index particular pages and later show them as results for a query.

- Links:

Google follows links, you know that, right? From one link to another, and the chain continues. When links point to the robots.txt file, Google will probably index it.

The links can be external (different websites pointing to your robots.txt file) or internal (some page of your website that points to the robots.txt file).

- Social signals:

The fastest way I know to get Google’s attention for a page is to share it on social platforms like Twitter, Google+ and Facebook (Google currently can’t see private Facebook sharing activity).

When you or someone else shares your robots.txt on social sites for some reason, this can be another common reason for Google to index the file.

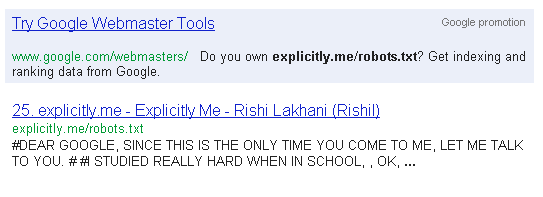

Consider Rishi Lakhani, who wrote a letter to Google in his website’s robots.txt file. He shared his creative robots.txt on Twitter and it went viral. According to Shared Count, Rishi Lakhani’s robots.txt file got:

- Facebook Likes: 21

- Facebook Comments: 8

- Facebook Shares: 33

- Twitter: 1232

Now you know why Google is probably going to index your robots.txt file, so let’s talk about action!

How to de-index the robots.txt?

Don’t link, don’t share:

This is not always under your control. In particular, you can’t stop people from linking to a specific page, especially on websites like the “Webmasterworld” forum or Last.fm.

Theoretically, though, if you don’t link to it and don’t share it on social platforms, Google will not show it.

URL removal request:

That’s the only simple yet powerful and safe way I have found to get your robots.txt file out of the Google index.

It’s great because the user’s site ownership is verified and the tool even shows the progress of each request.

These are the two ways I know to deal with the above-mentioned issue of the unintentionally indexed robots.txt file.

Do you have a better solution for the robots.txt indexing problem? Please share it with the community in the comment section.

I’m not sure your second option, the removal request, would actually work (though I haven’t tested it). Presumably a robots.txt file would return a 200 header status, meaning that the removal request wouldn’t go through.

Therein lay my logic problem! I decided it wasn’t doing any harm and have left it, but I did find it curious! :)

You can also try using the X-Robots-Tag HTTP header with “noindex” in the response.

More info here:

http://code.google.com/web/controlcrawlindex/docs/robots_meta_tag.html

It’s easy to set up via .htaccess. I’ve tried this on several sites. For some of them it worked, for others it didn’t…
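
For readers who want to see what such a response looks like, here is a minimal sketch. It is not the .htaccess setup mentioned in the comment above: it swaps in a small Python WSGI application purely for illustration. The names `app`, `demo` and `ROBOTS_BODY`, as well as the placeholder rules, are assumptions. Whether Google actually drops the file from its index afterwards is a separate question, as the mixed results above show.

```python
# Placeholder robots.txt content, an assumption for this sketch.
ROBOTS_BODY = b"User-agent: *\nDisallow:\n"


def app(environ, start_response):
    """Hypothetical WSGI app: serve /robots.txt with X-Robots-Tag: noindex."""
    if environ.get("PATH_INFO") == "/robots.txt":
        headers = [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(ROBOTS_BODY))),
            # Ask search engines not to index this response.
            ("X-Robots-Tag", "noindex"),
        ]
        # The status stays 200, so crawlers can still read the rules.
        start_response("200 OK", headers)
        return [ROBOTS_BODY]
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"not found\n"]


def demo():
    """Call the app directly (no server needed) and return what it sent."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app({"PATH_INFO": "/robots.txt"}, start_response))
    return captured["status"], captured["headers"], body


status, headers, body = demo()
print(status)                   # 200 OK
print(headers["X-Robots-Tag"])  # noindex
```

To try it in a browser, the same `app` could be served locally with `wsgiref.simple_server.make_server` from the standard library.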

Peter: Can’t you modify/manipulate the header status? Btw. I just submitted a removal request for my own robots.txt which is empty anyways.

Bogdan: Thank you for the feedback! Why do you think it didn’t work on some sites?

@tad

I don’t know exactly why it didn’t work. It is possible that eventually all my robots.txt files will be dropped out of the index. Might be a matter of time. I’m not sure.

Google says however that:

“by default pages are treated as crawlable, indexable, archivable, and their content is approved for use in snippets that show up in the search results, unless permission is specifically denied in a robots meta tag or X-Robots-Tag.”

OK Bogdan, thank you for the feedback. On a side note: The test with the removal request for my own robots.txt has worked. It’s now de-indexed:

https://www.google.com/search?q=site%3Ahttp%3A%2F%2Fseo2.0.onreact.com%2Frobots.txt

Interesting test. Was that with changing the header status of your robots.txt? I’d be loath to do that, as I want them to respect it, and if it were to return a 404 error, then I would have concerns that they wouldn’t respect the items I am blocking in there.

When I get time (I’ve just got back from some holiday) I’ll test a removal request on the one that made me observe this.

No Peter, I just did the removal request in Google Webmaster Tools as advised by Moosa.

Wow! Thank you for your comments people!

Peter, as we discussed on Twitter, I assumed robots.txt is a normal page and submitted my URL removal request to Google, and it worked out! I tried this on two different sites.

Bogdan, this is interesting! Thanks for the idea. I’ll look into it and post an update. Maybe your idea works better than mine…

Thanks to Tad for accepting my content and editing it for the readers!

I think the URL removal request is the best way out of this problem, and the link you posted to the robots.txt of Rishi Lakhani’s website was amazing.

Hi there, I noticed that you are linking to Sharedcount.com in your article http://seo2.0.onreact.com/does-google-index-your-robots-txt . As that service is being discontinued, perhaps it could be useful to replace the link with Sharescount.com, a new working alternative. Keep up the great work!